December 2024 vs December 2025: What I Got Right (and Wrong) About AI

Here’s my evaluation of the predictions I made back in December 2024 with the current state of affairs vis a vis AI. Hope you find this interesting. What were your predictions, and how did they pan out in 2025? Don’t forget to share your thoughts in the comments section!

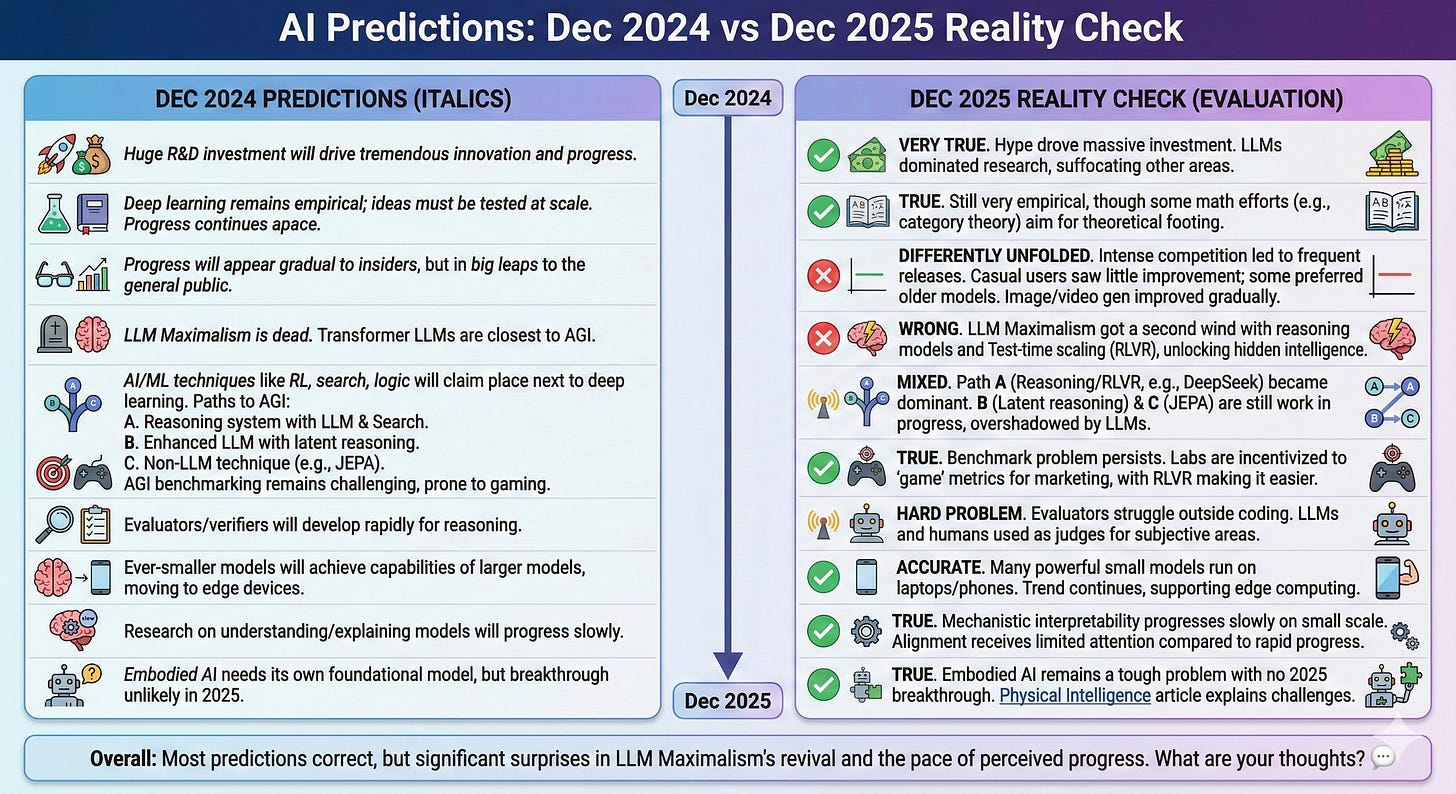

What I wrote in 2024 is in italics. You can see that I got most of the predictions correctly but there were a couple of surprises as well.

As 2024 concludes, I've been thinking about what's next for AI. Here are ten observations and predictions for 2025 - would love to hear your perspectives and what I might have missed!

1. Given the huge R&D investment going into frontier AI, we’ll continue to see tremendous amounts of innovation and progress along multiple fronts.

This turned out to be very true. Hype and expectations continued to drive investment into AI research, engineering, infrastructure, and applications. LLMs dominated research, suffocating most other areas due to a lack of attention and funding. If LLMs don’t lead us to AGI, then consider this time and effort spent as a journey “off-ramp on the way to AGI,” as Yann LeCun put it.

2. Unlike much of traditional computer science where algorithms can be analyzed on paper, deep learning remains highly empirical - ideas must be tested at scale to confirm their viability. With more known ideas than can be developed and tested in 2025, expect progress to continue at pace for several years before any slowdown.

Deep learning remains very empirical though valiant efforts are being made by a few die-hard mathematicians to put the field on a solid theoretical footing.

This book aims “to establish a principled and rigorous approach to understand deep neural networks and, more generally, intelligence itself.” Here’s a MLST video interview with Prof. Yi Ma, a co-author of the book. Here’s another video on MLST that talks about applying category theory to deep learning.

3. Still, progress will continue to appear gradual to those directly involved, and will appear to take place in occasional big leaps to the general public.

This unfolded differently than I’d envisioned. Because of the intense competition between the frontier labs, new models and features were being released to the public without a lot of holding time. New models were announced regularly with reports of better benchmark scores. Most casual users of the chatbots stopped noticing any discernible improvements, and in some cases wanted their favorite old model (OpenAI’s 4o) to be kept available. Image and video generation started to improve gradually (people consistently have 5 fingers per hand and text could finally be displayed without problems), reaching its pinnacle with Google Gemini’s Nano Banana!

4. LLM Maximalism (the idea that foundation models such as ChatGPT will lead to AGI with just more data and compute - say with version 6!) is dead. But transformer based LLMs are the closest thing to AGI we still have.

I was wrong here. LLM Maximalism got a second wind with reasoning models. Test-time scaling with Reinforcement Learning with Verifiable Rewards (RLVR) was exactly what was needed to unlock the intelligence hidden within the pre-trained model (which was created from training for next-token prediction on web-scale data). RLVR enabled code assistants to become the second killer app based on LLMs (the first was chatbots - in case you’re wondering).

5. AI/ML techniques such as Reinforcement learning, search/planning, bayesian networks and logic will claim their rightful place next to pure deep learning based architectures. The path to AGI is pursued along one of these paths:

A. A reasoning system with LLM at its core complemented by RL and Search (e.g., OpenAI’s o3). Here the LLM sits sort of separately and search happens in token space.

The ‘reasoning system’ implemented by o3, and then by all the frontier labs, turned out to be RLVR (see the previous point). A particular version of RLVR was revealed to the general public through this paper by DeepSeek, a Chinese lab.

B. An enhanced LLM with reasoning taking place within the models’ latent space (e.g., Meta’s recent COCONUT paper).

Latent reasoning is still being worked on. Perhaps we’ll see it in 2026.

C. Some other technique that doesn’t involve an LLM at all (e.g., JEPA).

JEPA is still being worked on, as are a few other architectures. With LLMs getting all attention and the investment dollars, this will likely remain this way in 2026.

6. AGI benchmarking remains challenging, as existing metrics either can be gamed or measure only necessary but insufficient conditions. While François Chollet is developing ARC-AGI-2, more effort is needed in this area.

AI continues to have a benchmark problem. I think many people, even those that have been closely following AI progress, underestimate the power of the incentive for LLM makers to target benchmark maxxing.

It's classic game theory - even though each of the frontier labs is aware that targeting benchmarks isn't very helpful for the model performance in the real-world, they have no choice but to invest in it because every model release is prominently accompanied by a benchmark scorecard.

Labs target benchmarks by training on specially created data matching test data (even if the exact benchmark tests are private, labs have access to examples and they can create more of them). RLVR makes benchmark maxxing even easier, btw.

7. Evaluators/verifiers will be developing rapidly as they are needed for reasoning and agentic systems.

Turns out this is a hard problem to solve outside of special areas like coding. LLMs (and even humans) are being used as judges in areas without definite answers. Here’s a fantastic article on the use of LLMs as evaluators.

8. Ever-smaller models will achieve capabilities currently limited to much larger models, bringing costs down and pushing more AI capabilities to edge devices.

This prediction turned out to be accurate. 2025 saw many small(er) models that can run on laptops/phones with surprisingly strong capabilities. This trend will continue into 2026 and beyond, and this promise is what supports Apple’s stock price :)

9. Research efforts (such as mechanistic interpretation) towards understanding and explaining foundational models will progress slowly given the relatively low investment. But this is an essential ingredient in addressing the alignment problem.

Mechanistic interpretability continues to progress, albeit at a very small scale. Anthropic continues to be the only major frontier lab that seems to focus a bit on the alignment problem (at least publicly), and the focus of the industry seems to be on progressing AI as quickly as possible.

10. I agree with the idea that Embodied AI needs its own foundational model, similar to how LLMs unified different natural language processing capabilities (such as translation, sentiment classification, conversation, etc.). Such a model, incorporating LLM-like knowledge and reasoning, would be crucial even for specific applications like self-driving. However, this breakthrough is unlikely in 2025.

This prediction turned out to be true. This isn’t an easy problem to solve. Here’s an article from Physical Intelligence that explains why embodied AI is such a tough problem to crack. It also gives you a glimpse of the current state of the art for robotics.