MCP Demystified: Bridging a Gap in Official Documentation

A simple and clear insight into how Claude Desktop actually uses MCP — with diagrams and an explanation you won’t find in the official docs.

«Terminology Note: Throughout this analysis, when I use the term "LLM" (Large Language Model), I’m referring to the class of foundation models that include not only text generation capabilities but also reasoning, planning, and multimodal capabilities (processing and generating images, audio, video, etc.). This expanded definition reflects the ongoing evolution of these models beyond pure language processing.

Series Note: This article is part of a series of articles I’m writing on MCP. See my blog for the complete collection.»

Anthropic’s Model Context Protocol (MCP) has gained significant traction over the past few months, but the official documentation at modelcontextprotocol.io either omits the role of the LLM or conflates it with that of Claude Desktop, and it uses some other terminology inconsistently. This ambiguity can cause confusion, especially for new or casual readers trying to understand how MCP actually works, and it can lead developers and architects to waste time working from a hazy or incorrect mental model of MCP-based applications.

My goal in this article is to walk you through how things work under the hood, so that by the end, you’ll have a clearer and more accurate understanding of how MCP-based apps like Claude Desktop are designed.

(And if I’ve missed or misrepresented anything, I’d love to hear — I’ll keep this updated as things evolve.)

A Quick Overview of Model Context Protocol

Developed by Anthropic, MCP is an open protocol built from the ground up to help develop agentic applications powered by LLMs. Using traditional, well-known software architecture techniques and best practices, MCP enables seamless integration between LLM applications and external data sources and tools.

There are four major components of MCP:

MCP servers provide access to data sources (e.g., portfolio data, market data), tools (e.g., Filesystem, Google Maps, weather), and prompts (e.g., reusable prompt templates and workflows).

MCP clients reside within an MCP host process, and each one connects to an MCP server.

MCP hosts run one or more MCP clients that connect with the MCP servers. MCP hosts act as both the technical orchestrators and the centralized dispatch system that enables LLMs to seamlessly interact with external data sources and tools while maintaining appropriate security boundaries.

LLMs (Large Language Models) provide the core “intelligence” for the system.

They receive prompts from the MCP hosts and generate context-aware responses.

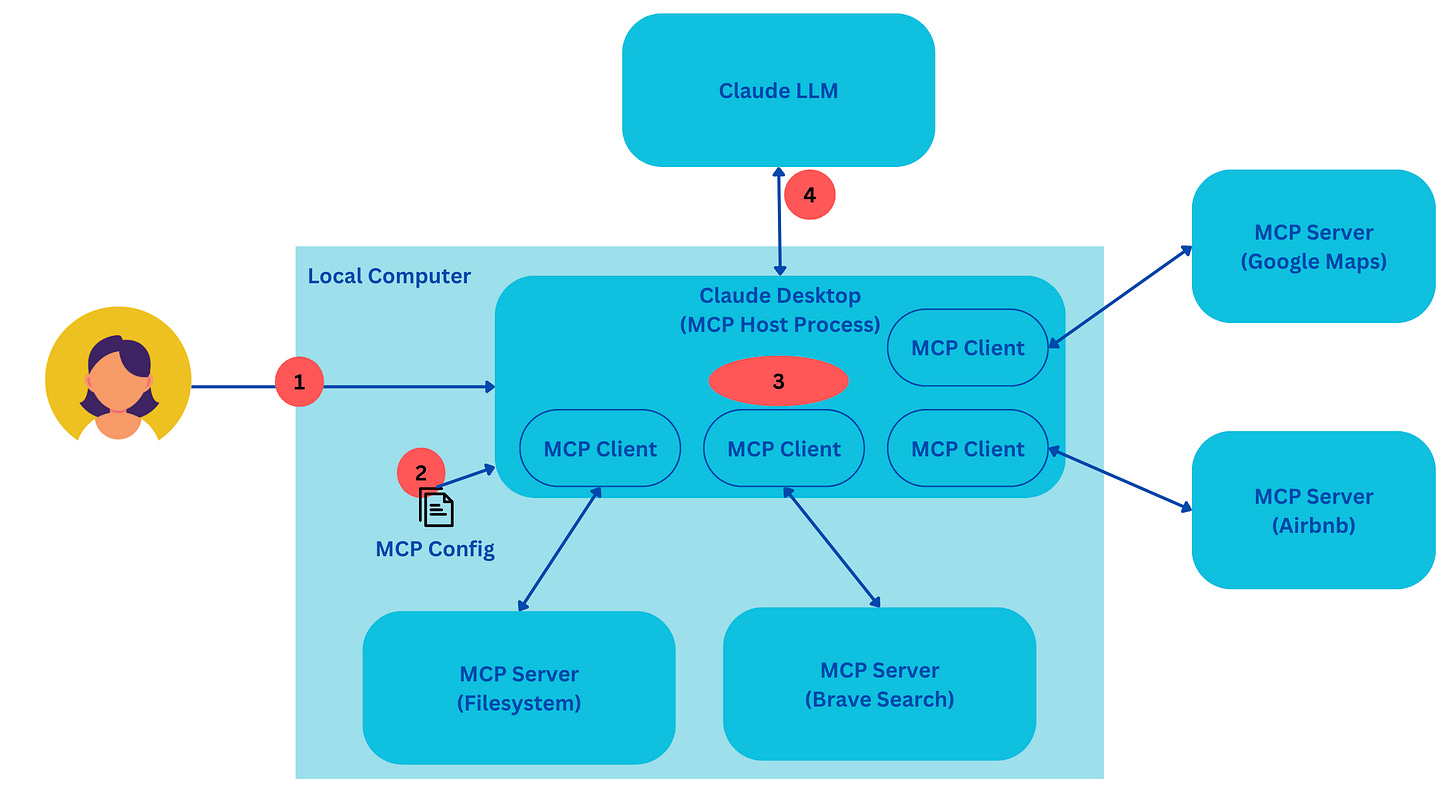
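To make the relationships between the four components concrete, here is a minimal sketch in Python. It is only a mental model, not how any real MCP SDK is structured: the class names (`McpServer`, `McpClient`, `McpHost`, `Llm`), the `connect` method, and the example server and tool names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class McpServer:
    """Exposes tools, resources, and prompts (hypothetical in-memory stand-in)."""
    name: str
    tools: list[str] = field(default_factory=list)


@dataclass
class McpClient:
    """Lives inside the host process and is tied to exactly one server."""
    server: McpServer


@dataclass
class Llm:
    """The model. It never talks to servers directly, only to the host."""
    model_name: str


@dataclass
class McpHost:
    """The application (e.g. Claude Desktop): owns the clients and talks to the LLM."""
    llm: Llm
    clients: dict[str, McpClient] = field(default_factory=dict)

    def connect(self, server: McpServer) -> None:
        # One client per server, keyed by the server's name.
        self.clients[server.name] = McpClient(server)


host = McpHost(llm=Llm("example-model"))
host.connect(McpServer("filesystem", tools=["read_file"]))
host.connect(McpServer("maps", tools=["get_route"]))
print(sorted(host.clients))
```

The point of the sketch is the ownership structure: the host holds both the LLM connection and the clients, so every exchange between the LLM and a server passes through the host.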

An Illustrative Example of Claude Desktop Application

To illustrate how a typical MCP application can work, I’ll show below how Claude Desktop uses these MCP components to provide its functions. Claude Desktop is the application that sits on the user’s computer and allows them to chat with one of Anthropic’s LLMs. It maintains their chat session context and chat history, and it integrates with MCP-based tools to enable the LLM’s agentic capabilities.

Initialization

User launches Claude Desktop application (the MCP Host Process).

Claude Desktop loads its configuration, either from local storage or from a remote source. The configuration includes details about each MCP server (e.g. endpoints, authentication tokens, usage parameters, etc.).

For every MCP Server in the config (Google Maps, Airbnb, Brave Search, Filesystem, or any other registered tool), Claude Desktop

loads the server’s metadata (e.g. what capabilities it offers, how to call it, what parameters are needed, etc.).

instantiates a small “MCP Client” inside the Claude Desktop process for each server.

Claude Desktop then sends the information about each tool to one of Anthropic’s LLMs, say Claude 3.7 Sonnet. The LLM is essentially told, “Here are the available MCP servers (tools). Here’s how you can call them, which parameters they expect, and what they return.”

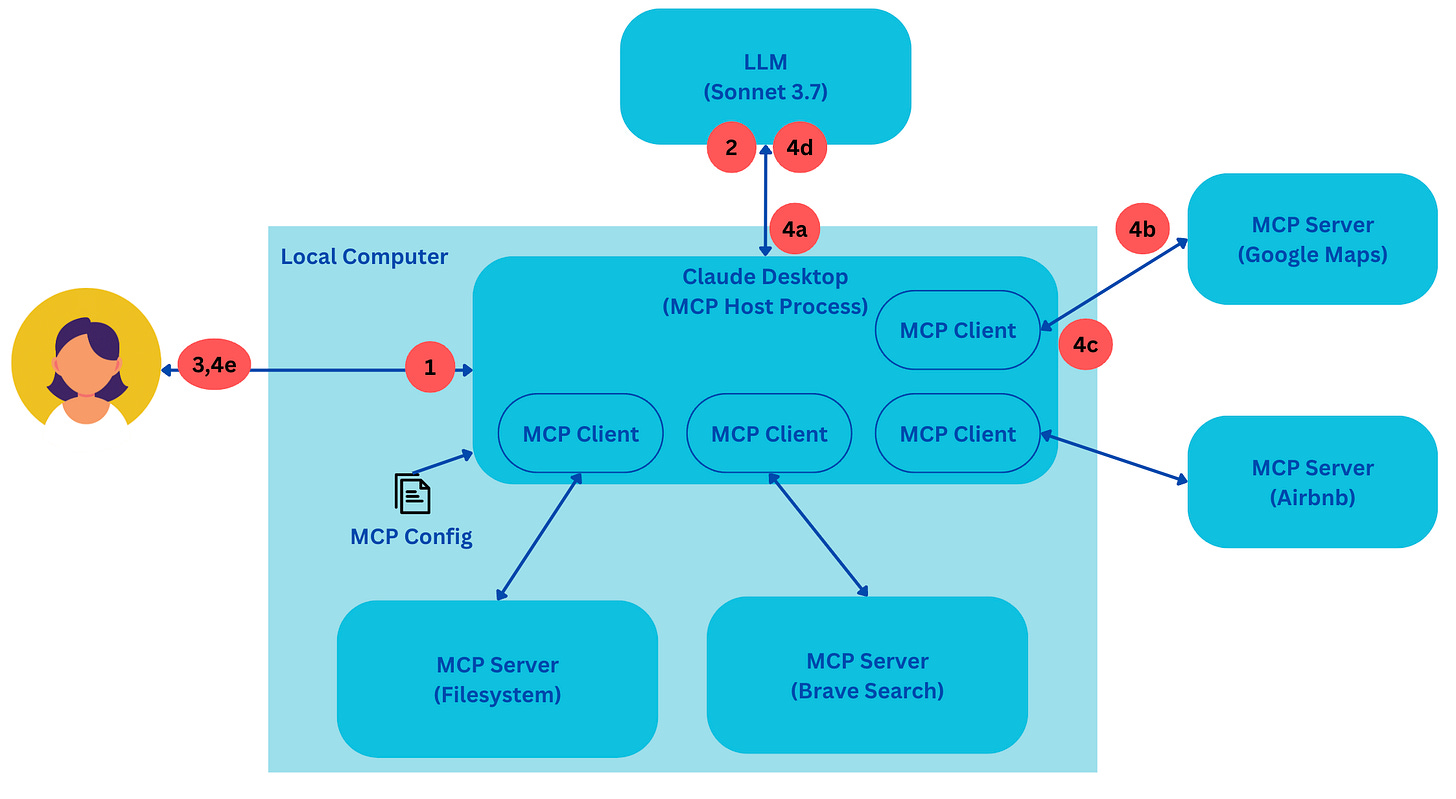
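The initialization steps above can be sketched roughly as follows. This is a simplified illustration under several assumptions: the config layout (an `mcpServers` map with `command` and `args`), the server package names, the `StubClient` class, and the tool metadata fields (`name`, `description`, `inputSchema`) are placeholders I picked for the example, and the stub client looks up canned metadata instead of talking to a real server.

```python
import json

# Hypothetical config text; the "mcpServers" layout is an assumption for this sketch.
CONFIG_TEXT = """
{
  "mcpServers": {
    "filesystem": {"command": "npx", "args": ["-y", "example-filesystem-server", "/tmp"]},
    "maps": {"command": "npx", "args": ["-y", "example-maps-server"]}
  }
}
"""

# Canned stand-in for what each server would report about itself at startup.
FAKE_SERVER_METADATA = {
    "filesystem": [{
        "name": "read_file",
        "description": "Read a local file",
        "inputSchema": {"type": "object", "properties": {"path": {"type": "string"}}},
    }],
    "maps": [{
        "name": "get_route",
        "description": "Look up a route between two places",
        "inputSchema": {"type": "object", "properties": {
            "origin": {"type": "string"}, "destination": {"type": "string"}}},
    }],
}


class StubClient:
    """Hypothetical per-server client living inside the host process."""

    def __init__(self, server_name, launch_spec):
        self.server_name = server_name
        self.launch_spec = launch_spec  # kept only to show where command/args would go

    def list_tools(self):
        # A real client would ask the server over the protocol; this stub looks it up.
        return FAKE_SERVER_METADATA.get(self.server_name, [])


def initialize(config_text):
    """Load the config, create one client per server, and collect tool metadata."""
    config = json.loads(config_text)
    clients = {name: StubClient(name, spec)
               for name, spec in config["mcpServers"].items()}
    tool_catalog = []
    for name, client in clients.items():
        for tool in client.list_tools():
            # Remember which server owns each tool so calls can be routed later.
            tool_catalog.append({"server": name, **tool})
    return clients, tool_catalog


clients, tool_catalog = initialize(CONFIG_TEXT)
print([tool["name"] for tool in tool_catalog])
```

In this sketch, `tool_catalog` is what the host would hand to the LLM along with the conversation, which is how the model learns which tools exist and what parameters they expect.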

User Interaction with Claude Desktop

The user enters a prompt or message. Claude Desktop passes the prompt to the LLM.

The LLM analyzes the user’s message in the context of the metadata about the available MCP servers. The LLM checks if it can answer the question on its own or if it needs to invoke one or more of these servers (for tools/resources/prompts).

If the LLM doesn’t need external information, it sends its answer to Claude Desktop, which then displays it in the user interface.

If the LLM does need information or to perform an action (e.g. searching, looking up a route, checking Airbnb listings, reading a local file, etc.):

The LLM sends a response to Claude Desktop specifying which MCP server to invoke and with which parameters.

Claude Desktop (the “Host Process”) routes that request via the correct MCP client to the correct MCP Server.

The MCP Server (e.g. Google Maps) processes the request and returns the result to Claude Desktop.

Claude Desktop passes the result to the LLM.

The LLM uses this information to form an appropriate final answer to the user and sends it back to Claude Desktop, which displays it in the user interface.
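The interaction flow above boils down to a loop that the host runs around the LLM. Here is a minimal sketch of that loop, with heavy assumptions: `fake_llm` is a scripted stand-in for the model, `StubMapsClient` returns a made-up result, and the reply shapes (`"text"` versus `"tool_call"`) are a format I invented for this example rather than any real API.

```python
def fake_llm(messages, tool_catalog):
    """Scripted stand-in for the model: requests a tool once, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        # A tool result came back, so form the final answer from it.
        return {"type": "text",
                "text": f"The route takes {last['content']['minutes']} minutes."}
    knows_route_tool = any(tool["name"] == "get_route" for tool in tool_catalog)
    if "route" in last["content"] and knows_route_tool:
        return {"type": "tool_call", "server": "maps", "tool": "get_route",
                "arguments": {"origin": "A", "destination": "B"}}
    return {"type": "text", "text": "I can answer that without any tools."}


class StubMapsClient:
    """Hypothetical client for a maps server; returns a canned result."""

    def call_tool(self, tool, arguments):
        return {"minutes": 25}


def handle_user_message(text, llm, clients, tool_catalog, max_steps=5):
    """Host-side loop: ask the LLM, route any tool call, feed the result back."""
    messages = [{"role": "user", "content": text}]
    for _ in range(max_steps):
        reply = llm(messages, tool_catalog)
        if reply["type"] == "text":
            return reply["text"]  # final answer, shown in the UI
        # The LLM only *requests* the call; the host picks the client and runs it.
        client = clients[reply["server"]]
        result = client.call_tool(reply["tool"], reply["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("LLM kept requesting tools; giving up")


catalog = [{"server": "maps", "name": "get_route"}]
clients = {"maps": StubMapsClient()}
print(handle_user_message("How long is the route from A to B?", fake_llm, clients, catalog))
print(handle_user_message("Hello there", fake_llm, clients, catalog))
```

Notice that the model never touches a server itself. It only returns a structured request, and the host decides whether and how to carry it out. The `max_steps` cap is my own addition to keep the loop from running forever.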

A Couple More Key Takeaways

A very important part of this architecture is the separation of concerns, which enables the implementation of guardrails and security controls. For example, Claude Desktop, which runs with the user’s privileges, can get explicit approval from the user before invoking a server. Servers, on the other hand, can validate that users have appropriate privileges to make their requests. In addition, the host app/clients can validate the results returned by the LLMs and/or servers for additional business validation (not implemented by Claude Desktop at the moment).
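As a rough illustration of the first guardrail, the host can wrap every tool invocation in an approval check. The sketch below is hypothetical: `invoke_with_approval`, the `ask_user` callback, and the returned dictionary shape are names I made up for the example, and a real host would show a dialog rather than call a lambda.

```python
def invoke_with_approval(call_tool, tool, arguments, ask_user):
    """Run the tool only if the user approves; otherwise report a denial."""
    question = f"Allow '{tool}' with arguments {arguments}?"
    if not ask_user(question):
        # Nothing is sent to the server when the user says no.
        return {"status": "denied", "result": None}
    return {"status": "ok", "result": call_tool(tool, arguments)}


def fake_call_tool(tool, arguments):
    """Stand-in for routing the call through an MCP client."""
    return f"contents of {arguments['path']}"


approved = invoke_with_approval(
    fake_call_tool, "read_file", {"path": "notes.txt"}, ask_user=lambda q: True)
denied = invoke_with_approval(
    fake_call_tool, "read_file", {"path": "notes.txt"}, ask_user=lambda q: False)
print(approved)
print(denied)
```

Because the check lives in the host rather than in the LLM or the server, it applies uniformly to every tool, whichever model requested the call.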

At a high level, I also find it useful to think of the LLMs as ‘business/user orchestrators’ and the hosts as ‘technical/system orchestrators.’

Conclusion

For developers and architects building LLM-powered applications, understanding the true mechanics of MCP is crucial. Rather than viewing it as a black box, recognizing the distinct roles and interactions of each component — the host, the client, the server, and the LLM — enables clearer implementations, tighter security, and more powerful user experiences.

As MCP evolves and gains broader adoption, its architectural model may well become a foundational pattern for building the next generation of agentic applications.