Why the Future of Agentic AI Won’t Be Chained: The Case for LLM-Driven Agents

Predicting the shift from developer-defined chains to LLM-driven orchestration.

«Terminology Note: Throughout this analysis, when I use the term "LLM" (Large Language Model), I’m referring to the class of foundation models that include not only text generation capabilities but also reasoning, planning, and multimodal capabilities (processing and generating images, audio, video, etc.). This expanded definition reflects the ongoing evolution of these models beyond pure language processing.

Series Note: This article is part of a series of articles I’m writing on MCP. See my blog for the complete collection.»

Introduction

Model Context Protocol (MCP) has been deservedly receiving significant attention lately. Anthropic calls MCP the "USB-C port for AI applications" in the sense that it provides a standardized way to connect AI models to different data sources and tools. However, another aspect of MCP remains underappreciated – and this is just as important as standardization. MCP positions the LLM at the center of agentic workflows, enabling a major paradigm shift. This paradigm shift is necessary to unlock the true potential of LLM-driven agentic workflows.

Meanwhile, many developers approach MCP after having worked with more traditional frameworks that put developers in complete control, and they view MCP as nothing more than a "USB-C port for AI applications." However, while both approaches aim to use LLM capabilities for orchestration, they differ significantly in philosophy, architecture, and implementation. Traditional frameworks favor a prescriptive approach, while MCP takes a descriptive approach, letting LLMs drive the workflows. Understanding how MCP compares to established frameworks like LangChain and its workflow extension LangGraph is key to realizing its full potential. (Note that MCP has many other key architectural features, such as a clean separation of concerns and an open, standards-based protocol, but covering them isn't the purpose of this article.)

In this article, I will compare the two approaches and make predictions as to which approach is likely to dominate the future. These predictions may raise a few eyebrows, but I welcome your views on what I might have missed or misunderstood.

The Two Paradigms: Prescriptive vs. Descriptive

MCP lends itself naturally to a descriptive paradigm where capabilities are described without dictating a rigid path. The LLM's intelligence drives the workflow in real time given the current context and user input. In contrast, frameworks such as LangChain/LangGraph have been used primarily for prescriptive workflows where the developer defines the path. Under this prescriptive paradigm, the LLM has limited autonomy, following a fixed structure designed in code or graph.

1. "Prescriptive, Developer-Driven" Workflows

Developers define the flow of tasks (chains or agents) in code. Each step (or node in a chain) is explicitly laid out—what input it takes, which tool it calls, what output it returns. This means the workflow is prescribed by the developers. They decide exactly which tools are available, in what order, and under which conditions they are invoked.

The framework manages control flow using conditionals, loops, and branching logic written by the developers. While these “agents” do allow some LLM-based autonomy, the agent's environment (available tools and instructions) remains largely predetermined in code.

Together, these design choices reflect a developer-driven, prescriptive workflow: the path for the LLM is structured in advance through code, and each stage or choice point is orchestrated primarily by the developer's logic. The LLM's role is typically limited to responding to an exacting prompt, further constrained by a narrow context supplied by the orchestrating framework (e.g., LangChain).
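To make the prescriptive pattern concrete, here is a minimal sketch in plain Python. It deliberately does not use LangChain or LangGraph APIs; the functions `search_orders`, `draft_reply`, and `escalate` are hypothetical stand-ins for tools and a templated LLM call. The point is only that the developer, not the model, fixes the order of steps and the branching condition.

```python
# Hypothetical stand-ins for real tools; none of these are LangChain APIs.
def search_orders(customer_id: str) -> dict:
    return {"customer_id": customer_id, "order_id": "A-100", "status": "delayed"}

def draft_reply(order: dict) -> str:
    # In a real system this would be an LLM call with a narrow, templated prompt.
    return f"Order {order['order_id']} is {order['status']}."

def escalate(order: dict) -> str:
    return f"Escalated order {order['order_id']} to a human agent."

def handle_request(customer_id: str) -> list[str]:
    """The developer fixes the step order and the branching condition in code."""
    outputs = []
    order = search_orders(customer_id)   # step 1: always runs first
    outputs.append(draft_reply(order))   # step 2: always runs second
    if order["status"] == "delayed":     # branch decided by developer logic
        outputs.append(escalate(order))
    return outputs
```

Any new tool or new ordering in this style requires editing `handle_request` itself.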

2. "Descriptive, Protocol-Centric, LLM-Driven" Workflows

A protocol enables tools, data sources, and prompts to describe their capabilities. An LLM is told the capabilities at its disposal and uses these capabilities appropriately without needing a pre-coded workflow. This architecture is descriptive: each capability "announces" what it can do, and the LLM decides how (or whether) to use it.

The LLM is effectively "in charge." It reads descriptions of available endpoints, interprets user requests, and decides which capability to call next—no fixed sequence is imposed by the developer. Control flow emerges from the LLM's reasoning, rather than being prescribed in a Python script or graph.

New capability endpoints can appear at any time, and as long as they adhere to the MCP protocol, the LLM can discover and use them. This approach leverages LLM intelligence to figure out the best sequence or combination of capabilities for any given user query, making the workflow LLM-driven and adaptive.
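The descriptive pattern can be sketched in the same spirit. The code below is not the MCP SDK or wire protocol; `Capability`, `REGISTRY`, `register`, and `choose_capability` are hypothetical names, and the "LLM" is replaced by a trivial word-overlap heuristic so the example runs on its own. What it illustrates is the shape of the idea: capabilities describe themselves, and the selection step reads those descriptions at request time instead of following a pre-coded path.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Capability:
    name: str
    description: str               # natural-language text the model would read
    handler: Callable[[str], str]

REGISTRY: dict[str, Capability] = {}

def register(cap: Capability) -> None:
    """A capability announces itself; no orchestration code changes."""
    REGISTRY[cap.name] = cap

def choose_capability(request: str) -> Optional[Capability]:
    """Stand-in for the LLM's reasoning: pick the capability whose description
    shares the most words with the request (None if there is no overlap)."""
    words = set(request.lower().split())
    best, best_score = None, 0
    for cap in REGISTRY.values():
        score = len(words & set(cap.description.lower().split()))
        if score > best_score:
            best, best_score = cap, score
    return best

def run(request: str) -> str:
    cap = choose_capability(request)
    return cap.handler(request) if cap else "no matching capability"

register(Capability("weather", "get current weather forecast for a city",
                    lambda req: "weather: sunny"))
register(Capability("calendar", "list upcoming calendar meetings and events",
                    lambda req: "calendar: 2 meetings"))
```

With only these two capabilities registered, `run("translate this text")` finds no match. Registering a third capability whose description mentions translating text makes the same call route to it, with no change to `run`.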

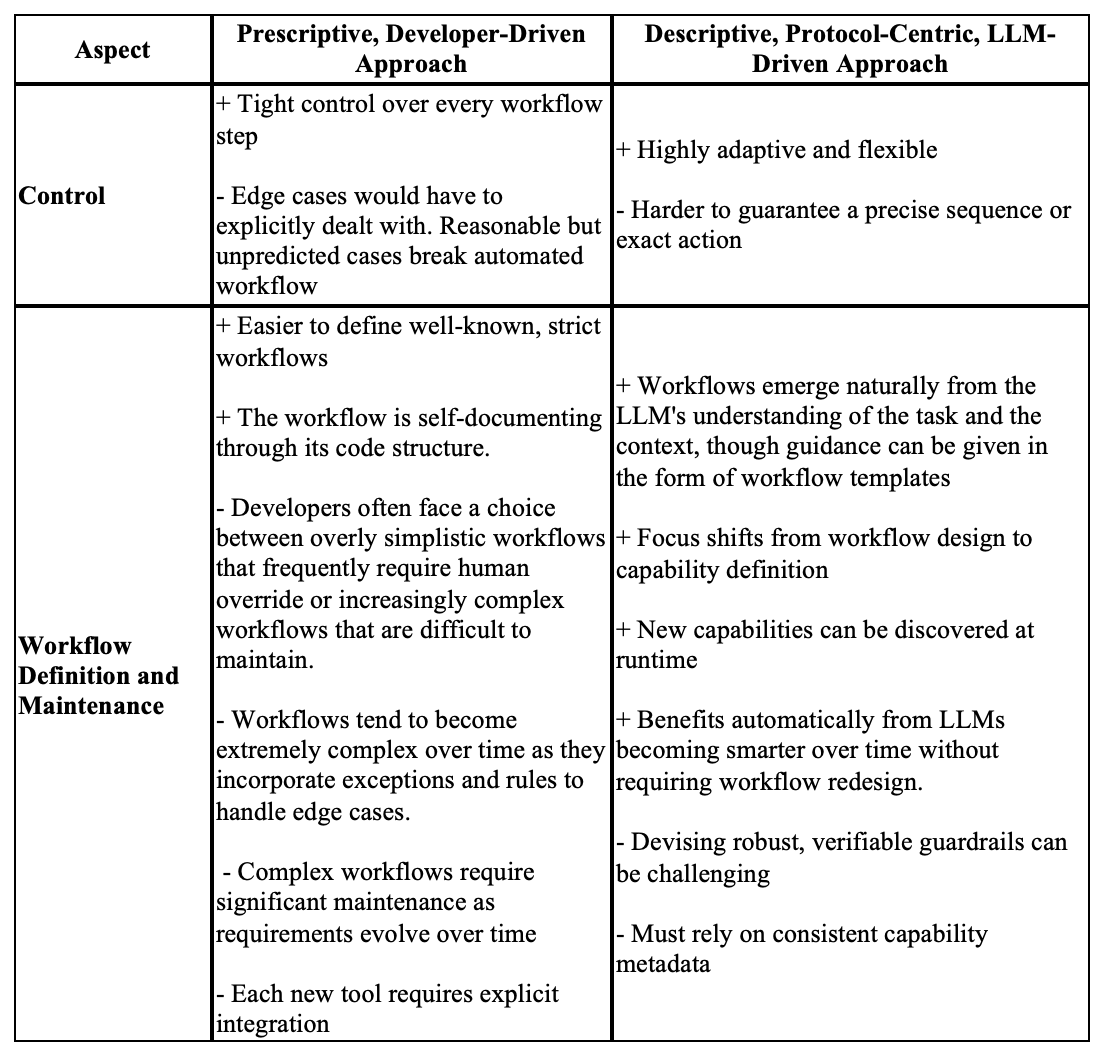

A comparison of Strengths and Limitations of the two paradigms:

Long-Term Strategic Prediction: Why the Descriptive Paradigm Will Likely Prevail

I predict that LLM-driven protocols like MCP that allow for descriptive approaches will ultimately emerge as the dominant paradigm for agentic orchestration in the medium to long term, though significant effort will be needed to mature the implementation patterns for guardrails, workflow templating, and testing techniques.

The Case for the Descriptive Paradigm as the Long-Term Winner

1. Alignment with AI's Evolution Toward Greater Agency: As LLMs continue to advance in reasoning capabilities, the fundamental limitation will increasingly be the rigidity of the systems around them rather than the models themselves. Descriptive paradigms that empower the LLM to make orchestration decisions align more naturally with the trajectory of AI development toward greater agency and reasoning.

2. Scalability Across the Capability Explosion: If the past few months are any indicator, the coming years will see an explosion in the number and variety of specialized AI capabilities, tools, and services that support MCP natively. As the number of capabilities grows linearly, the number of possible workflows grows combinatorially. Explicitly programming all these workflows becomes untenable in a prescriptive approach.

3. Developer Experience and Productivity: While frameworks like LangChain offer familiar paradigms for developers, the long-term productivity advantages favor MCP-like approaches. Clear separation between capability implementation (e.g., tools, resources, and prompts) and orchestration logic creates better modularity. This allows developers to focus on building and improving individual capabilities rather than maintaining complex workflow graphs. New capabilities can be added or enhanced incrementally without redesigning entire workflows.

4. Adaptability to User Needs: A long-term advantage of LLM-driven descriptive approaches is their ability to adapt to the practically unbounded variability of user needs. Users can express their goals in natural language rather than conforming to predefined paths. The system can dynamically adapt based on the specific context of each interaction. And the LLM can incorporate user preferences expressed conversationally without requiring explicit personalization logic.
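The scalability point (item 2 above) can be made concrete with a small counting exercise. As a simplifying assumption of mine, treat a "workflow" as an ordered sequence of distinct tools, at most `max_steps` long; real workflows with branches and loops would only make the numbers larger. The function name `count_workflows` is hypothetical.

```python
import math

def count_workflows(n_tools: int, max_steps: int) -> int:
    """Count ordered sequences of distinct tools of length 1..max_steps.

    math.perm(n, k) is the number of ordered selections of k items from n.
    """
    return sum(math.perm(n_tools, k) for k in range(1, max_steps + 1))

# Doubling the tool count from 5 to 10 to 20, with at most 3 steps:
for n in (5, 10, 20):
    print(n, count_workflows(n, 3))
# prints:
# 5 85
# 10 820
# 20 7240
```

Under this assumption, going from 5 to 20 tools multiplies the number of candidate three-step workflows by roughly 85, which is the sense in which hand-coding every path stops being practical.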

Counter-Arguments and Limitations

Despite these advantages, there are legitimate concerns about LLM-driven descriptive approaches that must be addressed:

1. Control and Reliability Concerns: The primary argument against LLM-driven approaches is the risk of unpredictable behavior when the LLM makes orchestration decisions. For regulated domains like finance or healthcare, organizations need guaranteed execution of critical compliance steps. It's difficult to test all possible paths an LLM might take through a set of capabilities. Handling errors gracefully is more complex when the workflow isn't explicitly defined.

2. Performance Considerations: Prescriptive, component-based approaches can potentially optimize performance in ways that dynamic orchestration cannot. Developers can create optimized sequences that minimize latency and resource usage. Explicit workflow definitions make it easier to identify opportunities for parallel execution.

3. Evolution of Prescriptive Frameworks: As both ecosystems evolve, we're likely to see convergence in some areas. LangChain/LangGraph can support increasing use of LLMs to dynamically select between predefined subgraphs or to modify graph structure at runtime. I’m sure there’ll be efforts to allow these frameworks to be used with MCP.
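The performance point (item 2 above) is easiest to see in code. In the sketch below, the developer knows in advance that two lookups are independent and runs them concurrently with `asyncio.gather`. The functions `fetch_profile` and `fetch_orders` are hypothetical, and `asyncio.sleep` stands in for network latency.

```python
import asyncio

async def fetch_profile(user_id: str) -> dict:
    await asyncio.sleep(0.05)            # simulated I/O latency
    return {"user_id": user_id, "tier": "gold"}

async def fetch_orders(user_id: str) -> list[str]:
    await asyncio.sleep(0.05)            # simulated I/O latency
    return ["A-100", "A-101"]

async def build_summary(user_id: str) -> str:
    # The explicit workflow makes the independence of these two steps visible,
    # so they can be awaited concurrently instead of one after the other.
    profile, orders = await asyncio.gather(
        fetch_profile(user_id), fetch_orders(user_id)
    )
    return f"{profile['tier']} customer with {len(orders)} orders"

def summarize(user_id: str) -> str:
    return asyncio.run(build_summary(user_id))
```

An LLM-driven orchestrator that issues tool calls one at a time would pay both latencies in sequence unless the runtime or the model is able to batch independent calls.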

Long-Term Architecture and Transition Timelines

The most likely long-term outcome is an architecture where:

the core architecture remains protocol-centric, with clear separation of responsibilities, and standardized interfaces for capability discovery and invocation.

developers can define recommended workflow templates that guide but don't constrain the LLM.

instead of rigid workflows validated with traditional testing, the system provides sophisticated guardrails that ensure critical requirements are met while allowing flexibility within those constraints.

organizations can dial the level of LLM autonomy based on domain requirements, starting with more constrained workflows and gradually enabling more dynamic orchestration as trust builds.
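One way the guardrail, template, and autonomy ideas above might fit together is sketched below. This is an illustration under my own assumptions, not an existing MCP feature: `Guardrail`, `review_plan`, the `"constrained"` and `"guided"` autonomy levels, and the step names are all hypothetical. The guardrail checks ordering requirements on a plan proposed by the LLM, and the autonomy setting decides whether deviating from the recommended template is allowed.

```python
from dataclasses import dataclass, field

@dataclass
class Guardrail:
    # Maps a critical step to the steps that must have run before it.
    required_before: dict[str, set[str]] = field(default_factory=dict)

    def check(self, plan: list[str]) -> list[str]:
        """Return a list of violations; an empty list means the plan passes."""
        violations = []
        seen: set[str] = set()
        for step in plan:
            missing = self.required_before.get(step, set()) - seen
            for name in sorted(missing):
                violations.append(f"'{step}' requires '{name}' first")
            seen.add(step)
        return violations

def review_plan(plan: list[str], template: list[str],
                guardrail: Guardrail, autonomy: str) -> list[str]:
    """Review an LLM-proposed plan; return reasons to reject it (empty = accept)."""
    problems = guardrail.check(plan)
    # "constrained": the template is mandatory. "guided": it is only a suggestion.
    if autonomy == "constrained" and plan != template:
        problems.append("plan deviates from the recommended template")
    return problems
```

For example, with a guardrail requiring `verify_identity` and `check_sanctions` before `transfer_funds`, a plan that reorders the two checks passes in `"guided"` mode but is rejected in `"constrained"` mode, while a plan that skips a check is rejected in both.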

The transition will likely follow this pattern:

Short-term (1-2 years): Prescriptive frameworks like LangChain/LangGraph will continue to dominate due to developer familiarity and concerns around the reliability of LLMs, especially for domains with strict, well-defined processes and high regulatory scrutiny. However, firms will likely try descriptive, MCP-like solutions for use cases where the flexibility and power of a descriptive framework can be naturally paired with a human in the loop.

Medium-term (2-5 years): As the industry gains experience in adopting MCP-like architectures (e.g., how to build workflow templates and guardrails effectively), and as LLMs become more reliable and capable, the descriptive paradigm will dominate, with prescriptive approaches used selectively for special cases.

Long-term (5+ years): Will AGI eventually emerge and obviate the need for any of these discussions?

Conclusion

Both MCP and LangChain/LangGraph represent significant advancements in LLM-powered applications, but with fundamentally different philosophies. LangChain/LangGraph excels in scenarios where workflows need to be precisely defined and controlled, while MCP enables more adaptive, emergent workflows driven by the LLM's understanding of user needs and available capabilities.

The prescriptive paradigm "works around AI" while the descriptive paradigm "works with AI." The history of technology shows that flexible, adaptable architectures that take advantage of the growing sophistication of underlying technologies (LLMs in this case) tend to outlast more rigid, prescriptive approaches in the long run.